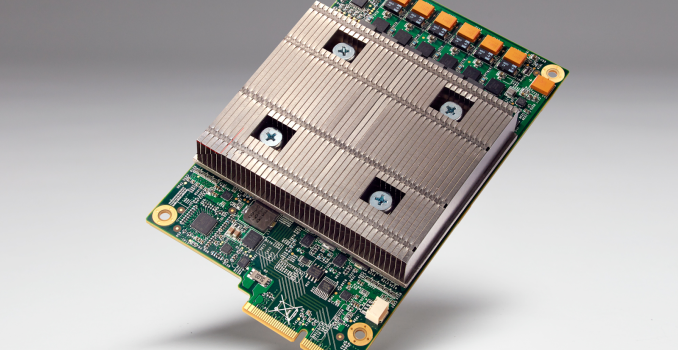

Google’s Tensor Processing Unit: What We Know

If you’ve followed Google’s announcements at I/O 2016, one standout from the keynote was the mention of a Tensor Processing Unit, or TPU (not to be confused with thermoplastic urethane). I was hoping to learn more about this TPU; however, Google is currently holding any architectural details close to its chest.

More will come later this year, but for now what we know is that this is an actual processor with an ISA of some kind. What exactly that ISA entails isn't something Google is disclosing at this time (and I'm curious as to whether it's even Turing complete), though in its blog post on the TPU, Google did mention that it uses "reduced computational precision." It’s a fair bet that, unlike GPUs, there is no ISA-level support for 64-bit data types, and given the workload it’s likely that we’re looking at 16-bit floats or fixed-point values, or possibly even 8-bit values.
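To make "reduced computational precision" a bit more concrete, here is a minimal Python sketch of a dot product carried out on 8-bit fixed-point values instead of full-precision floats. To be clear, this is not Google's scheme, which hasn't been disclosed; the `quantize` and `quantized_dot` helpers, the single per-vector scale factor, and the sample numbers are all assumptions made purely for illustration.

```python
def quantize(values, bits=8):
    """Map floats to signed integers of the given width using one shared scale.

    Hypothetical helper: symmetric linear quantization, chosen for simplicity.
    """
    qmax = 2 ** (bits - 1) - 1                   # 127 for 8 bits
    peak = max(abs(v) for v in values) or 1.0    # avoid dividing by zero
    scale = peak / qmax
    return [round(v / scale) for v in values], scale


def quantized_dot(a, b, bits=8):
    """Dot product using integer multiplies and an integer accumulator."""
    qa, scale_a = quantize(a, bits)
    qb, scale_b = quantize(b, bits)
    acc = sum(x * y for x, y in zip(qa, qb))     # all-integer arithmetic
    return acc * scale_a * scale_b               # rescale to a float once


# Made-up sample data, standing in for a neuron's weights and inputs.
weights = [0.12, -0.53, 0.98, 0.07]
inputs = [1.5, -2.0, 0.25, 3.0]

exact = sum(w * x for w, x in zip(weights, inputs))
approx = quantized_dot(weights, inputs)
print(f"float: {exact:.4f}  8-bit: {approx:.4f}")
```

The two results land close to each other even though every multiply in the quantized path works on small integers. That tolerance for rounding error is what makes narrow data types plausible for this kind of workload, since narrower multipliers cost less silicon area and power.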

Reaching even further, it’s possible that instructions are statically scheduled in the TPU, although this is based only on a rather general comment about static scheduling being more power efficient than dynamic scheduling, which is hardly a revelation. I wouldn’t be entirely surprised if the TPU looks an awful lot like a VLIW DSP with support for massive levels of SIMD and some twist to make it easier to program, especially given recent research papers and industry discussions regarding the power efficiency and potential of DSPs in machine learning applications. Of course, this is just idle speculation, so it’s entirely possible that I’m completely off the mark here, but it’ll definitely be interesting to see exactly what architecture Google has decided is best suited to machine learning applications.