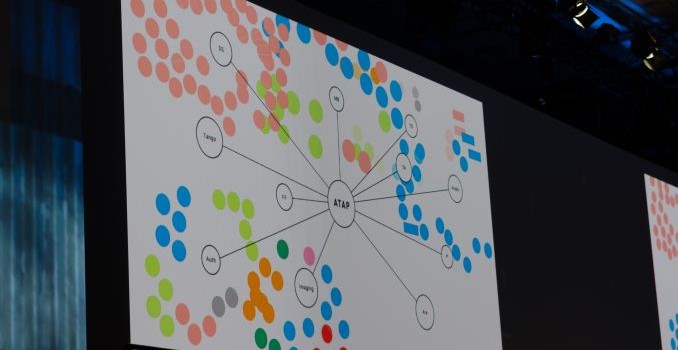

Today at Google I/O, Google’s Advanced Technologies and Products (ATAP) group went over some of the things it has worked on in the year since the previous I/O. There are a few different projects, but the most immediate and important announcements centered on technologies for wearable devices. These two announcements, Project Soli and Jacquard, both focus on solving the challenges of input on wearables. Although wearables are on the market today, one of the biggest problems with Android Wear, and with most wearables in general, is that an almost purely touch-based interface forces nearly every touch target to take up a large part of the display so that it can be hit reliably.

Google’s ATAP group noted that this limitation is partially due to the processing limits of the brain, which vary for different parts of the body. The elbow, for example, has very little sensation and cannot be finely positioned. ATAP’s research indicates that the fingers, by comparison, have 20 times the processing bit rate, and can therefore provide precise input.

For ATAP, the logical conclusion was Soli, a new single-chip solution for detecting finger motion and gestures. Soli takes a fundamentally different approach from conventional solutions such as capacitive touch screens, which cannot detect 3D motion, and camera-based motion detection systems like Kinect, which cannot accurately detect fine motion. Soli instead uses 60 GHz radio, a frequency high enough that the signal reflects well off the hand and offers enough resolution to distinguish fine motion, comparable to a capacitive touch display. ATAP also said that the transmitter emits a wide, cone-shaped spread rather than scanning with a narrow beam of radio waves, which helps to drive down cost. The current solution uses two transmitters and four receivers, which presumably improves noise rejection and allows accurate tracking of multiple fingers. Given the extremely high frequency, it’s also a fair bet that we’re looking at a superheterodyne architecture, but unfortunately I was unable to get confirmation of this from ATAP representatives.
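As a rough sanity check on why 60 GHz suits fine motion, the free-space wavelength is λ = c/f, which at 60 GHz works out to about 5 mm. This is only a back-of-the-envelope sketch; Soli’s real resolution also depends on bandwidth and signal processing that ATAP has not detailed, and the helper name `wavelength_mm` is purely illustrative.

```python
# Free-space wavelength of a radio wave: wavelength = c / f.
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # exact, by definition of the metre


def wavelength_mm(frequency_hz: float) -> float:
    """Return the free-space wavelength in millimetres for a given frequency."""
    return SPEED_OF_LIGHT_M_PER_S / frequency_hz * 1000.0


SOLI_BAND_HZ = 60e9  # 60 GHz, the band Google cites for Soli
print(f"60 GHz wavelength: {wavelength_mm(SOLI_BAND_HZ):.2f} mm")
```

A wavelength on the order of millimetres is what makes finger-scale motion plausible to sense at all; lower-frequency radios have wavelengths far larger than a fingertip.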

With this hardware and a lot of proprietary algorithms that leverage machine learning and deep neural nets, it becomes possible to track extremely fine gestures. Something as simple as tapping your thumb against your index finger could be equivalent to tapping a button, and swiping your thumb along your index finger could be equivalent to scrolling on a touch screen.
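ATAP did not describe how recognized gestures reach applications, so the following is only a hypothetical sketch of the idea above: the gesture labels, the `UiEvent` type, and the scroll gain are all invented for illustration. It shows how a classifier’s output could be translated into familiar touch-screen events, a thumb tap becoming a tap and a thumb swipe becoming a scroll proportional to thumb travel.

```python
from dataclasses import dataclass
from enum import Enum, auto


class Gesture(Enum):
    # Hypothetical labels a gesture classifier might emit.
    THUMB_TAP = auto()    # thumb taps the side of the index finger
    THUMB_SWIPE = auto()  # thumb slides along the index finger


@dataclass
class UiEvent:
    kind: str             # "tap" or "scroll"
    delta_px: float = 0.0  # scroll distance; unused for taps


SCROLL_GAIN_PX_PER_MM = 40.0  # hypothetical: on-screen pixels per mm of thumb travel


def to_ui_event(gesture: Gesture, travel_mm: float = 0.0) -> UiEvent:
    """Translate a recognized micro-gesture into a touch-screen-style event."""
    if gesture is Gesture.THUMB_TAP:
        return UiEvent("tap")
    if gesture is Gesture.THUMB_SWIPE:
        return UiEvent("scroll", travel_mm * SCROLL_GAIN_PX_PER_MM)
    raise ValueError(f"unhandled gesture: {gesture}")


print(to_ui_event(Gesture.THUMB_TAP))
print(to_ui_event(Gesture.THUMB_SWIPE, travel_mm=5.0))
```

Mapping onto existing touch events means apps would not need a new input model, which is one plausible reason to frame micro-gestures as "virtual" buttons and scroll wheels.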

Clearly, there are a ton of possibilities with such technology, and probably the most obvious one is enabling a seamless experience on wearables like Google Glass, which suffered from a poor user interface because it relied on head motion and touching the glasses themselves to navigate the UI. ODG had an interesting finger-mounted controller, but it was obvious that it could easily be lost, and it was inherently somewhat clunky due to the number of controls that had to be integrated. The demos tended to focus on smartwatches, but my experience with the Apple Watch suggests that Force Touch and the Digital Crown, in addition to the touchscreen, really resolve most of the problems that come with the small displays of smartwatches. The real benefits start to appear in the context of other types of wearables.

The other technology that ATAP unveiled was named Jacquard, which is a bit less impressive from a pure technology perspective but definitely interesting from a manufacturing perspective. In short, Jacquard is a way of weaving capacitive touch digitizers into cloth.
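ATAP did not describe Jacquard’s sensing electronics, so as a generic illustration only: a woven capacitive panel can in principle be read like any row/column mutual-capacitance grid, where each crossing of a row thread and a column thread is a sensing cell, and a finger near a crossing lowers its measured capacitance relative to a no-touch baseline. The sketch below, with a hypothetical `locate_touch` function and arbitrary units, picks the cell whose drop is largest and exceeds a threshold.

```python
from typing import List, Optional, Tuple

Grid = List[List[float]]  # rows x columns of capacitance readings (arbitrary units)


def locate_touch(baseline: Grid, reading: Grid,
                 threshold: float) -> Optional[Tuple[int, int]]:
    """Return (row, col) of the strongest touch, or None if no cell exceeds threshold."""
    best: Optional[Tuple[int, int]] = None
    best_delta = threshold
    for r, (base_row, read_row) in enumerate(zip(baseline, reading)):
        for c, (base, value) in enumerate(zip(base_row, read_row)):
            # A finger near a row/column crossing reduces its mutual capacitance,
            # so the touch signal is how far the reading fell below baseline.
            delta = base - value
            if delta > best_delta:
                best_delta = delta
                best = (r, c)
    return best


baseline = [[1.0] * 4 for _ in range(3)]  # 3 row threads x 4 column threads
reading = [row[:] for row in baseline]
reading[1][2] = 0.7                       # simulated finger over row 1, column 2
print(locate_touch(baseline, reading, threshold=0.1))
```

Because each thread must be wired to the scanning electronics, a grid like this needs one connection per row and per column, which is why connecting woven conductors to logic is a real engineering problem.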

According to Google, there were two major challenges in enabling Jacquard. The first was that pre-existing conductive yarn had incredibly high resistance: 15 ohms per meter, around 280 times that of 22 AWG copper wire at 0.053 ohms per meter, which makes it incredibly difficult to implement a good touch panel of any significant size. In addition, it was available in only a single color. The other problem was that no one had previously tried to integrate so many wires so deeply into a fabric, and the ATAP team faced many challenges in connecting the wires to logic once they had been woven into the fabric, which is necessary to make the touch panel useful.
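The "around 280 times" figure follows directly from the two per-meter resistances Google quoted. The sketch below checks that ratio and, for a hypothetical 0.5 m conductor (a length chosen only for illustration), shows the absolute resistances involved; `trace_resistance` is an illustrative helper, not anything ATAP described.

```python
# Per-meter resistances quoted by Google (ohms per meter).
OLD_YARN_OHM_PER_M = 15.0       # pre-existing conductive yarn
COPPER_22AWG_OHM_PER_M = 0.053  # 22 AWG copper wire


def trace_resistance(ohm_per_m: float, length_m: float) -> float:
    """Resistance of a uniform conductor of the given length."""
    return ohm_per_m * length_m


ratio = OLD_YARN_OHM_PER_M / COPPER_22AWG_OHM_PER_M
print(f"old yarn vs. copper: about {ratio:.0f}x")

# Hypothetical 0.5 m run of each conductor.
for name, ohm_per_m in [("old yarn", OLD_YARN_OHM_PER_M),
                        ("22 AWG copper", COPPER_22AWG_OHM_PER_M)]:
    print(f"{name}: {trace_resistance(ohm_per_m, 0.5):.4f} ohms over 0.5 m")
```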

To solve the problem of connecting the woven touch panel to electronics, Google developed a special type of weaving that exposes the conductive strands of yarn in a specific order, allowing fast and simple attachment to connectors. To solve the problem of high-resistance yarn, ATAP made its own yarn, with a resistance only around twice that of 22 AWG copper wire, that can be made in almost any color or material.
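Taking ATAP’s "around twice" figure at face value gives a rough estimate of the new yarn’s resistance and its improvement over the old yarn. This back-of-the-envelope sketch assumes "twice" means exactly 2x copper; ATAP did not publish an exact number, so the derived values are estimates, not specifications.

```python
COPPER_22AWG_OHM_PER_M = 0.053  # 22 AWG copper wire, ohms per meter
OLD_YARN_OHM_PER_M = 15.0       # pre-existing conductive yarn, ohms per meter

# Assumption: "around twice" the resistance of copper is modeled as exactly 2x.
ATAP_YARN_OHM_PER_M = 2.0 * COPPER_22AWG_OHM_PER_M

improvement = OLD_YARN_OHM_PER_M / ATAP_YARN_OHM_PER_M
print(f"estimated ATAP yarn: {ATAP_YARN_OHM_PER_M:.3f} ohms/m")
print(f"improvement over old yarn: about {improvement:.0f}x")
```

Under that assumption the new yarn would be roughly 140 times less resistive than the old conductive yarn, which is what makes larger woven panels practical.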

Although there are effectively no details on the actual process, which is likely a trade secret, this cloth reportedly works without issue on existing textile machines. To demonstrate this, ATAP showed off a tailored jacket made with Jacquard touch-sensitive material to prove that the technology can blend seamlessly with fashion. The result is that much larger touch panels become possible on wearable devices because they are effectively invisible. Given that Levi’s has already partnered with ATAP on this project, I suspect that Jacquard is closer to commercialization than Soli. Overall, these two projects clearly address user experience problems in wearables, but it remains to be seen whether ATAP can turn these prototypes into mass-market products.